Aside from needing insane amounts of energy, data centers also need a lot of water to keep their AI systems running. Is this making climate change worse?

Great and All, But…

You don’t have to be a technophile to realize that technology is booming, with new AI achievements springing up seemingly daily. But all those data centers, machines, and programs don’t run on empty air.

So, quick question: can the United States, particularly its power grid, keep up with demand as AI continues to evolve at breakneck speed?

Potent Power Usage

For instance, did you know that one ChatGPT query requires almost 10 times more energy than an ordinary Google search? That’s according to a report by Goldman Sachs, which also informs us that generating a single AI image uses about as much power as it takes to charge your smartphone.

Not a New Thing

This isn’t a new problem: estimates from 2019 found that training just one large language model produced as much CO2 as five gas-powered cars do over their entire lifetimes.

A Tiny Difference

Dipti Vachani, head of automotive at Arm, says: “If we don’t start thinking about this power problem differently now, we’re never going to see this dream we have.” The chip company’s low-power processors keep gaining popularity, especially among big-name players like Google, Microsoft, Oracle, and Amazon. The reason? They can lower data centers’ power use by up to 15%.

A Bit Better

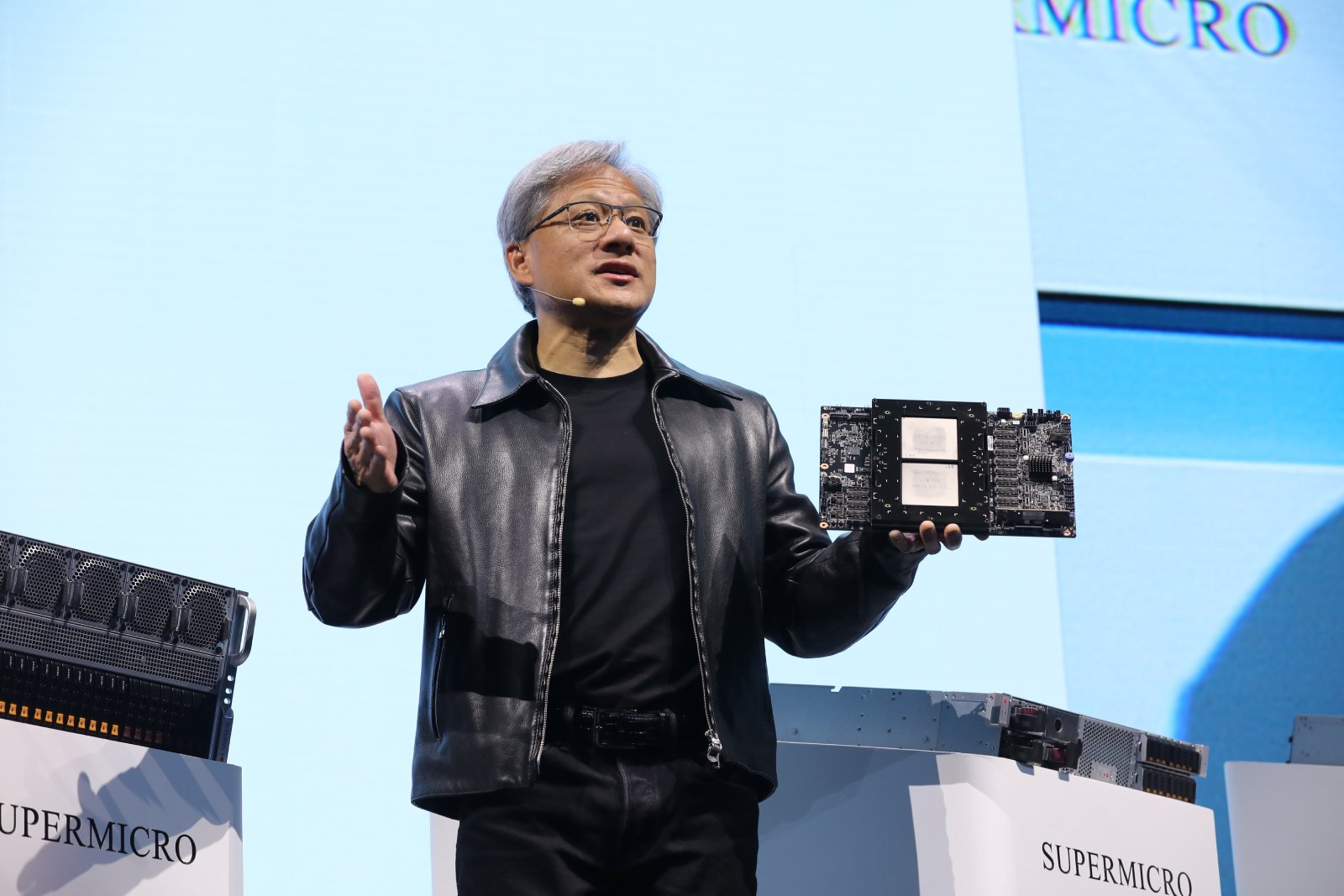

Image Credit: Shutterstock / glen photo

Even better, Nvidia’s GB200 Grace Blackwell Superchip can supposedly run generative AI models on up to 25 times less power than the previous generation. As Vachani states: “Saving every last bit of power is going to be a fundamentally different design than when you’re trying to maximize the performance.”

Not the End-All Solution

And yes, this approach of minimizing power use via enhanced computational efficiency, commonly known as “more work per watt,” is one way of fixing the AI energy crisis – but it’s not enough.

The AI Hub

Currently, over 8,000 data centers are scattered around the world – and no prizes for guessing that the highest concentration is in the US. But this number will be far exceeded within the next 10 years, thanks to AI.

More Needed?

According to Boston Consulting Group, demand for data centers will rise by 15%-20% every year through 2030. By then, they are estimated to make up 16% of North America’s entire power consumption.
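
To get a feel for what 15%-20% a year adds up to, here is a minimal Python sketch of the compounding. It assumes the growth starts in 2022 and runs through 2030, which is our own window for illustration and not something the Boston Consulting Group estimate spells out. The helper name `growth_multiple` is hypothetical.

```python
def growth_multiple(annual_rate: float, years: int) -> float:
    """Return how many times larger a quantity is after compounding yearly."""
    return (1 + annual_rate) ** years


# Assumption: an 8-year window (2022 through 2030), chosen for illustration only.
YEARS = 2030 - 2022

if __name__ == "__main__":
    for rate in (0.15, 0.20):
        multiple = growth_multiple(rate, YEARS)
        print(f"{rate:.0%} a year for {YEARS} years -> about {multiple:.1f}x the starting level")
```

Under those assumptions, the low and high ends of the range land quite far apart by 2030, which is why a five-point difference in the yearly rate matters so much to grid planners.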

Quite the Upsurge

For comparison’s sake, this is an increase from a mere 2.5% in 2022, before the release of OpenAI’s ChatGPT. And that 16% share is about as much power as two-thirds of all the houses in the US use.

No Surprise

Not surprisingly, AI companies are already experiencing hiccups. Speaking to CNBC, Jeff Tench, executive vice president at Vantage Data Centers, says there’s already a “slowdown” in Northern California due to a “lack of availability of power from the utilities here in this area.”

A Green Solution?

Tench adds: “The industry itself is looking for places where there is either proximate access to renewables, either wind or solar, and other infrastructure that can be leveraged, whether it be part of an incentive program to convert what would have been a coal-fired plant into natural gas, or increasingly looking at ways in which to offtake power from nuclear facilities.”

Not Done With Fossil Fuel Yet

Speaking of lack of power, the electricity needs are so high in Kansas City that the plans to shut down a coal-fired power plant are being postponed – and that’s exactly where Meta is constructing a new AI-focused data center.

According to Tench: “We suspect that the amount of demand that we’ll see from AI-specific applications will be as much or more than we’ve seen historically from cloud computing.”

An Insane Amount

Various big tech brands work with firms like Vantage to house their servers. Vantage’s data centers, according to Tench, can use over 64 megawatts of power – about as much as is needed to power tens of thousands of homes.

Set to Increase

“Many of those are being taken up by single customers, where they’ll have the entirety of the space leased to them. And as we think about AI applications, those numbers can grow quite significantly beyond that into hundreds of megawatts,” he added.
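
To put those megawatt figures in household terms, here is a rough back-of-the-envelope sketch in Python. The household consumption number is our own assumption (roughly 10,800 kWh per home per year, in line with commonly cited US averages) and does not come from Tench or Vantage. The function name `homes_equivalent` is hypothetical.

```python
HOURS_PER_YEAR = 8760

# Assumption: an average US home uses roughly 10,800 kWh of electricity per year.
# This figure is illustrative and is not taken from the article's sources.
ASSUMED_KWH_PER_HOME_PER_YEAR = 10_800


def homes_equivalent(megawatts: float,
                     kwh_per_home_per_year: float = ASSUMED_KWH_PER_HOME_PER_YEAR) -> float:
    """Estimate how many average homes draw the same continuous power as a data center."""
    average_kw_per_home = kwh_per_home_per_year / HOURS_PER_YEAR
    return megawatts * 1000 / average_kw_per_home


if __name__ == "__main__":
    for mw in (64, 300):  # 300 MW is an arbitrary stand-in for "hundreds of megawatts"
        print(f"{mw} MW is roughly the average draw of {homes_equivalent(mw):,.0f} homes")
```

This treats the data center as drawing its full power around the clock and compares it with a home’s average (not peak) demand, so the result is only a ballpark.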

Gearing up the Grid

But even when enough power is produced, it’s the outdated grid that often lacks the capacity to handle the demand. Moving the power from the source to the end user is where the issue occurs. A possible solution is to install transmission lines stretching for hundreds or even thousands of miles.

Who’s Paying?

But according to Shaolei Ren, associate professor of electrical and computer engineering at the University of California, Riverside, “That’s very costly and very time-consuming, and sometimes the cost is just passed down to residents in a utility bill increase.” Recently, a $5.2 billion plan to extend transmission lines to a region of Virginia known as “data center alley” was opposed by local ratepayers, who refused to foot the bill for the project.

What About Water?

But we’re not done with the problems yet, because generative AI data centers also need water to stay cool – as in 4.2 billion to 6.6 billion cubic meters by 2027, as per Ren’s research. That’s more water than half the UK uses in one year!

A Fix for Many?

Fortunately for Vantage, it can fall back on large A/C units to keep its data center in Santa Clara cool – although the company has also recently turned to direct-to-chip liquid cooling. As Tench puts it: “For a lot of data centers, that requires an enormous amount of retrofit. In our case at Vantage, about six years ago, we deployed a design that would allow for us to tap into that cold water loop here on the data hall floor.”

Enough for Everyone?

According to Tom Ferguson, managing partner at Burnt Island Ventures, we’re focusing on the wrong issue: “Everybody is worried about AI being energy intensive. We can solve that when we get off our ass and stop being such idiots about nuclear, right? That’s solvable. Water is the fundamental limiting factor to what is coming in terms of AI.”

Missing the Deadline

And in the meantime, AI technology continues to increase its carbon footprint – just ask Google, which recently admitted in its newest environmental impact report that it is not on track to achieve net-zero carbon emissions by 2030, as originally planned.
